Lenovo

Efficient Inference of Large Language Models on Single GPU

Large Language Models (LLMs) such as Llama 3.1-70B excel at natural language processing tasks but require substantial computational and memory resources, typically necessitating multiple high-memory GPUs (e.g., NVIDIA A100). This poses a barrier to users with limited resources. The key challenge is to perform inference on large-scale LLMs efficiently using a single GPU (e.g., NVIDIA A40 or RTX 4090) while maintaining acceptable model accuracy and throughput, thereby making these models more accessible and widely applicable.

To address this challenge, the student team aims to achieve the following objectives:

1. Research on Model Compression:
   - Systematically evaluate the impact of model compression techniques on LLM accuracy and inference performance during both the prefill and decode stages, establishing comprehensive benchmarks.
2. Compression and Long-Sequence Inference Optimization of LLMs:
   - Apply selected compression techniques to the Llama 3.1-70B model, keeping the model's accuracy loss within 5%.
   - Support long-sequence inputs (e.g., ≥10k tokens) by employing techniques such as KV cache optimization, activation quantization, and offloading to enhance inference efficiency and throughput.
   - Through the optimization techniques described above, achieve at least one of the following targets:
     - Target 1: Run inference of the Llama 3.1-70B model on an A40 (48GB) GPU, supporting inputs of up to 10k tokens, with a throughput of ≥10 tokens/s and a model accuracy loss within 5%.
     - Target 2: Run inference of the Llama 3.1-70B model on an RTX 4090 (24GB) GPU, supporting context inputs of at least 1,000 tokens, with a throughput of ≥10 tokens/s and a model accuracy loss within 5%.

The student team will advance the project according to the following milestones:

1. Technical Selection and Evaluation:
   - Investigate the principles and implementations of model compression techniques such as quantization, calibration, sparsification, and pruning.
   - Experiment systematically with various compression techniques to assess their impact on model accuracy and inference performance.
   - Explore new ideas and methods to enhance existing technologies.
2. Implementation of Compression and Optimization:
   - Apply the selected model compression methods to the Llama 3.1-70B model to reduce model size and memory footprint.
   - Test the quantized model to ensure that the accuracy loss remains within 5%, measured using standard benchmarks such as perplexity (PPL) on datasets like WikiText2, and task-specific benchmarks like MMLU.
   - Evaluate the inference speed and resource utilization of the quantized model on the target hardware to inform the next stage of optimization.
3. Optimization of Long-Sequence Inference Performance:
   - Develop and implement techniques such as KV cache optimization, activation quantization, and offloading to enhance the inference efficiency of the compressed model for long-sequence inputs on a single GPU (A40 or RTX 4090).
   - Enhance the throughput of long-sequence inference, aiming for at least 10 tokens/s.
4. System Integration:
   - Integrate the compression and long-sequence optimization techniques into a unified inference workflow, ensuring system stability and reliability.
   - Adjust model and system parameters for the target hardware (A40 and RTX 4090) to maximize performance.
   - Ensure the workflow is modular and easily integrable into mainstream AI frameworks.
5. Performance Verification and Technical Analysis:
   - Test the final optimized model in an actual hardware environment to verify whether it meets the expected performance targets.
   - Organize experimental results and analyze the impact of different techniques on model performance.
   - Prepare a technical report evaluating the performance and applicability of each technique, providing recommendations for future research.
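
To make the memory constraints behind the two hardware targets concrete, the following is a rough back-of-the-envelope sketch rather than part of the project itself. It assumes the publicly documented Llama 3.1-70B configuration (about 70.6B parameters, 80 layers, 8 key/value heads under grouped-query attention, head dimension 128), counts only weights and the KV cache for a single sequence, and ignores activations and runtime overhead. The function names `weight_gb`, `kv_cache_gb`, and `fits` are hypothetical helpers introduced here for illustration.

```python
# Back-of-the-envelope memory estimate. All architecture numbers below are
# assumptions based on the publicly documented Llama 3.1-70B configuration.
N_PARAMS = 70.6e9   # approximate parameter count (assumption)
N_LAYERS = 80       # transformer layers (assumption)
N_KV_HEADS = 8      # grouped-query attention KV heads (assumption)
HEAD_DIM = 128      # per-head dimension (assumption)
GB = 1e9            # decimal gigabytes


def weight_gb(bits_per_weight: float) -> float:
    """Memory for the weights alone at a given average bit-width."""
    return N_PARAMS * bits_per_weight / 8 / GB


def kv_cache_gb(n_tokens: int, bits_per_value: float = 16) -> float:
    """Memory for the keys and values of one sequence across all layers."""
    values_per_token = 2 * N_LAYERS * N_KV_HEADS * HEAD_DIM  # 2 = K and V
    return n_tokens * values_per_token * bits_per_value / 8 / GB


def fits(gpu_gb: float, weight_bits: float, n_tokens: int,
         kv_bits: float = 16) -> bool:
    """True if weights plus KV cache fit; ignores activations and overhead."""
    return weight_gb(weight_bits) + kv_cache_gb(n_tokens, kv_bits) <= gpu_gb


if __name__ == "__main__":
    for bits in (16, 8, 4, 2):
        print(f"{bits:>2}-bit weights: {weight_gb(bits):6.1f} GB")
    print(f"KV cache, 10k tokens, FP16: {kv_cache_gb(10_000):.2f} GB")
    print("A40 48GB, 4-bit weights, 10k tokens:", fits(48, 4, 10_000))
    print("RTX 4090 24GB, 4-bit weights, 1k tokens:", fits(24, 4, 1_000))
```

Under these assumptions, 4-bit weights plus a 10k-token FP16 KV cache stay below 48GB, whereas 4-bit weights alone already exceed 24GB. This is one way to see why the RTX 4090 target would likely need a lower average bit-width, offloading, or both.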

Faculty Adviser(s)

Radha Poovendran, Electrical & Computer Engineering

Related News

Mon, 10/13/2025 | UW Mechanical Engineering

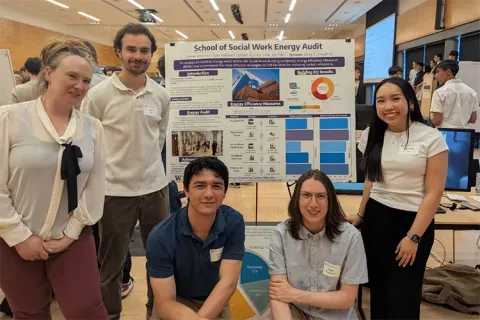

Capstone collaboration leads to award

An ME capstone team received first place for its energy audit of the UW School of Social Work building.

Thu, 07/17/2025

UW engineering students develop smart ballot solution

UW engineering students develop smart technology solution to improve ballot collection for Snohomish County.

Mon, 07/07/2025 | UW Mechanical Engineering

Capstone creations

Students displayed innovative capstone design projects at the 2025 expo.

Fri, 09/20/2024 | UW Civil & Environmental Engineering

Smarter irrigation for a greener UW

A new project combines satellite data with ground sensors to conserve water and create a more sustainable campus environment.