RealNetworks

Quantifying Bias in AI: Detecting Bias Along the Machine-Learning Pipeline

The growth of face recognition (FR) technology has been accompanied by persistent concerns that demographic dependencies could cause accuracy to vary across groups and lead to bias. For over a decade, the tech industry has anticipated integrating computer vision into the human-machine interface to enhance automation. As it turned out, FR's early traction has come from security and surveillance applications, rightly prompting dystopian fears. Engineers have benefited from readily available AI and machine-learning tools that let them train models to ever-higher accuracy, but the fairness and ethics of their algorithms have often been an afterthought. This project aims to develop a tool set to measure and reduce bias in AI training sets, test sets, and the resulting models. Its goals also include practical tech policy recommendations for the industry regarding bias in AI models.

Faculty Adviser(s)

Arindam Das, Electrical & Computer Engineering

Related News

Mon, 10/13/2025 | UW Mechanical Engineering

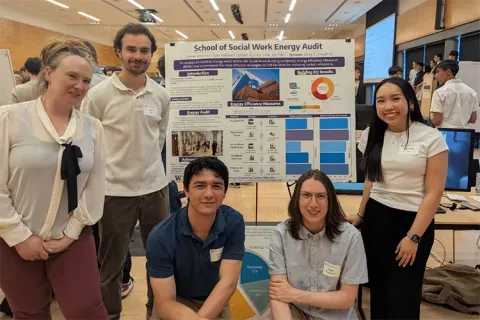

Capstone collaboration leads to award

An ME capstone team received first place for its energy audit of the UW School of Social Work building.

Mon, 07/07/2025 | UW Mechanical Engineering

Capstone creations

Students displayed innovative capstone design projects at the 2025 expo.

Fri, 09/20/2024 | UW Civil & Environmental Engineering

Smarter irrigation for a greener UW

A new project combines satellite data with ground sensors to conserve water and create a more sustainable campus environment.

Mon, 09/09/2024 | UW Mechanical Engineering

Testing an in-home mobility system

Through innovative capstone projects, engineering students worked with community members on an adaptable mobility system.