Good grips

UW researchers are taking on one of the greatest challenges in robotics: the ability to grasp and manipulate objects with superhuman dexterity.

By Ed Kromer

Have you ever considered how you pluck an egg from its carton? Retrieve a coin from a sofa cushion? Grab a loaf of bread? Screw in a wing nut? Pull out a weed? Catch a Frisbee? Tie a bow knot? Cut with scissors? Pour from a bottle?

Most of us perform countless everyday tasks with our hands without a second thought. These endlessly useful appendages, and the intricate sensory system that guides them, have been shaped by 60 million years of evolution.

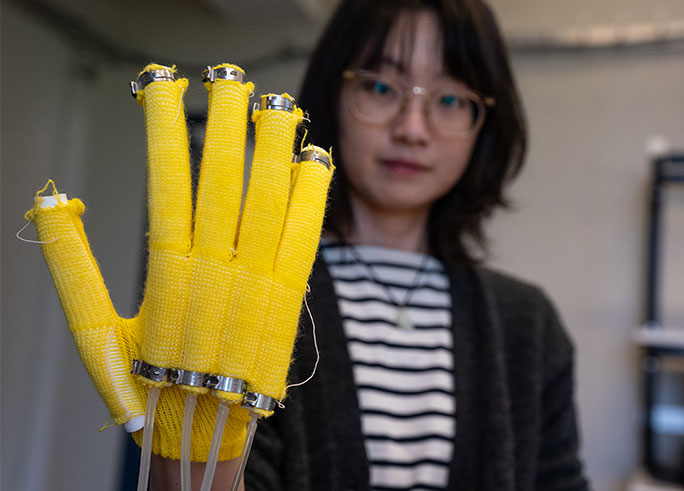

A student directs an Alt-Bionics robotic hand to gesture, grasp and manipulate in the UW Robotics Lab. Dennis Wise/University of Washington

Robots have far less history. The ability to grasp and manipulate objects with dexterity — the key to their utility in industrial, healthcare and household applications — remains one of the biggest challenges faced by designers and developers.

In robotics, the holy grail is a healthy grip.

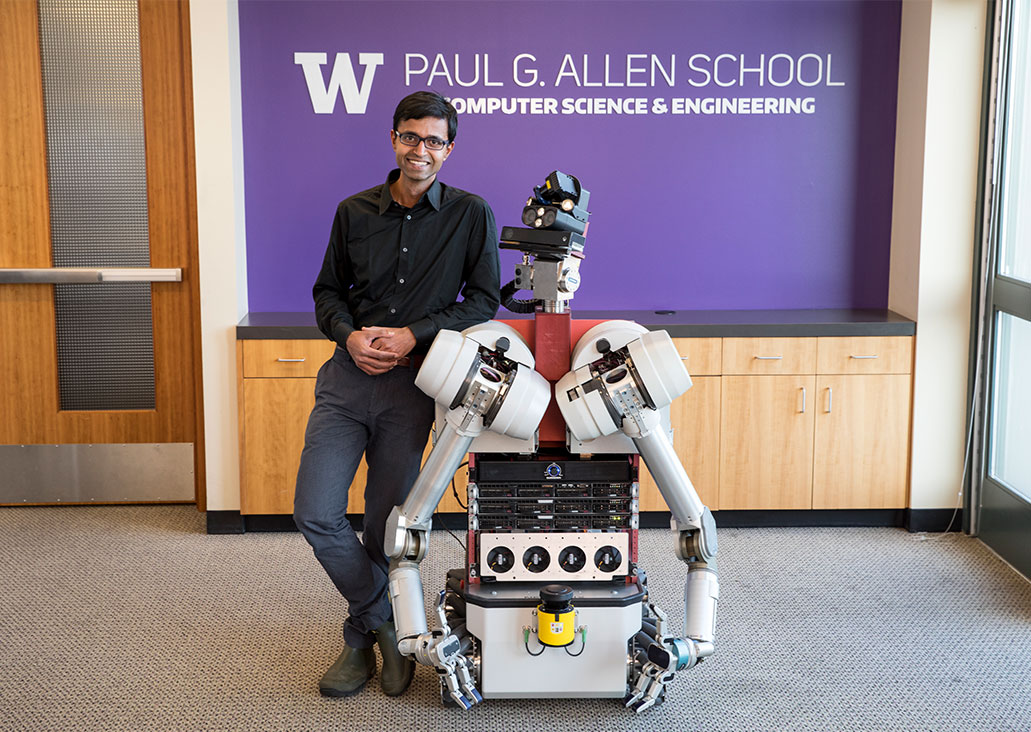

“We forget how complicated these physical manipulation tasks are,” says Siddhartha Srinivasa, a professor in the Paul G. Allen School of Computer Science & Engineering. “You have to be simultaneously forceful and delicate.”

Researchers across the UW College of Engineering are working to find that balance, refining the capacity of robots to grasp with brute strength, delicate finesse, fine precision or preternatural dexterity, or some combination of these.

It starts with influential veterans such as Srinivasa, founder of the UW Personal Robotics Lab and Amazon’s Robotics AI organization, and Allen School Professor Dieter Fox, founder of the UW Robotics and State Estimation Lab and the NVIDIA Seattle Robotics Lab — and now leading a new initiative at the Allen Institute for Artificial Intelligence. Their fundamental contributions to motion planning, perception and machine learning have empowered robots to perform complicated manipulation tasks in real-world environments.

They are joined by a new generation of brilliant roboticists who are approaching the field’s enduring puzzle from multiple disciplines and with fresh ideas.

Grip that doesn’t slip

Xu Chen, an associate professor and the Bryan T. McMinn Endowed Research Professor in mechanical engineering, began in robotics by building a dual-arm robot that can autonomously play chess and solve a Rubik's cube.

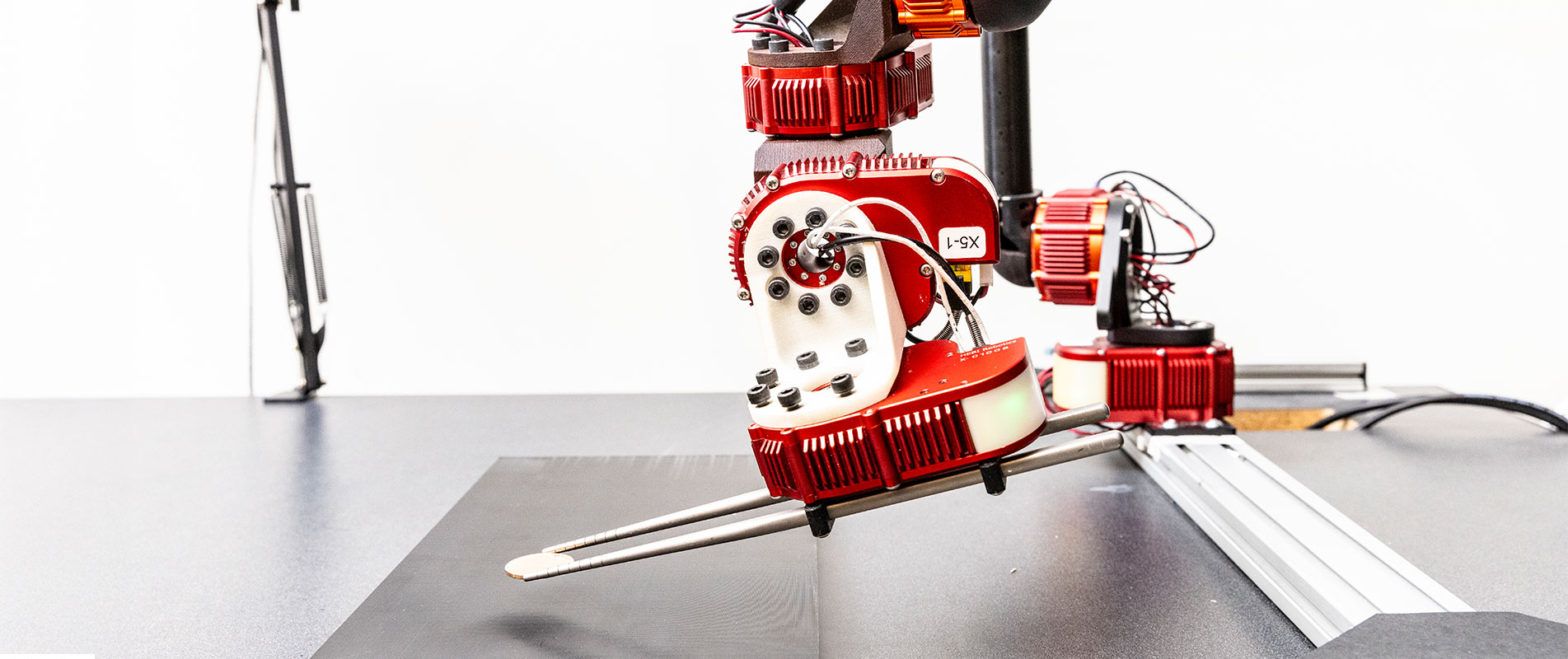

Xu Chen uses photographic sensors to predict and prevent slippage, creating a more stable robotic grip for light games and heavy industrial tasks. Dennis Wise/University of Washington

These feats were accomplished with feedback control algorithms that integrate visual and tactile data. And Chen began adapting this technology for industrial applications through the Boeing Advanced Research Collaboration (BARC) and the Mechatronics, Automation and Control Systems (MACS) Laboratory, both of which he directs.

“Industrial manufacturing requires great precision,” Chen says. “For that, the visual and the tactile need to work together.”

His group has developed a robot with parallel grippers, guided by external cameras producing three-dimensional images, that deftly removes and inspects complex aircraft engine blades for the tiniest blemish. It can do this more quickly, accurately and tirelessly than the sharpest of humans.

Another project integrates visual and tactile data to predict and prevent slippage. Tiny cameras mounted behind silicone sensors on robotic grippers capture the minute deformations that appear when an object begins to slip from its grasp. A machine learning algorithm processes the entropy of these deformation signals and its rate of change, then applies that feedback to correct the grip in real time.

“For many robots, precision isn’t there yet,” says Chen, who receives support from the UW CoMotion Innovation Gap Fund. “So, it’s scientifically intriguing to explore how we can apply precision control to robotics.”

Tactile textiles

Visual sensing works well in many industrial applications, but it can be difficult to scale up or down to guide more nuanced robotic graspers.

Yiyue Luo’s advanced tactile sensors, woven into wearables or worked onto robotic grippers, can accelerate imitation learning and policy training. Ryan Hoover

Enter Yiyue Luo, an assistant professor of electrical and computer engineering. In her Wearable Intelligence Lab, Luo is developing networks of tiny tactile sensors that are soft, flexible and lightweight, and that conform to uneven shapes. Perfect, Luo says, for "complex geometries that would be super hard for conventional visual sensors."

Like human bodies. Or robot hands.

The initial application of this technology is in textiles that can monitor physical health, sense body activity or support rehabilitation, as demonstrated by the candy-colored knit sweaters, sleeves and gloves displayed in Luo’s lab. These smart garments are fabricated by an automated digital knitting machine — think a 3D printer but more complicated — from natural fibers that are imperceptibly coated in sensors. These sensors enable recording, monitoring and learning of human-environment interactions.

It was not a stretch for Luo to extend the sensors' utility to robot-environment interactions. The technology, woven into gloves or worked onto robotic grippers, has the potential to accelerate imitation learning and policy training. And it promises to advance remote-control robotics (a.k.a. teleoperation), allowing a human operator to "feel what the robot is feeling," she adds.

Luo is open sourcing her tech and data to help advance the development of dexterous robotic grasping that may, one day, exceed human touch.

“It’s not hard at this point to capture tactile information,” says Luo. “But knowing the best way to use this information is more difficult.”

How to train your robot

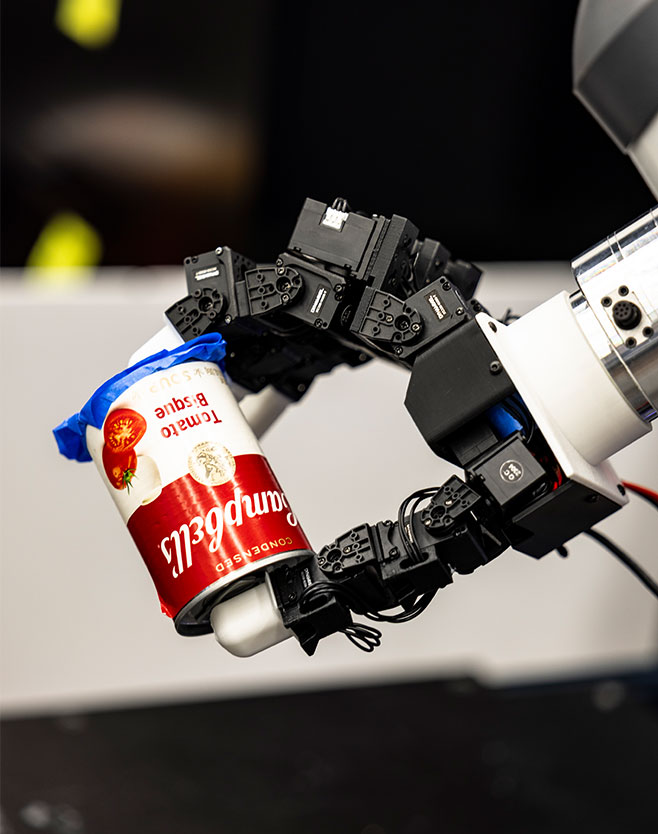

Abhishek Gupta, an assistant professor of computer science and engineering, is working on this very thing. Gupta creates learning-based algorithms that help robots handle unfamiliar objects and unpredictable tasks in everyday spaces such as homes and workplaces.

Abhishek Gupta is developing functional grasping, enabling robots to perform complex tasks in real-world settings. Dennis Wise/University of Washington

This work reflects a keen interest in a higher-order challenge of robotics known as "functional grasping."

“The idea is that you can either grasp to grasp or you can grasp to use. These are not the same,” Gupta says. “If I want to grasp something with the intent of performing a task downstream, what is the best way to do it?”

The task can dictate the optimal approach, grip and force on an object.

Optimizing manipulation is a key focus of Gupta’s Washington Embodied Intelligence and Robotics Development (WEIRD) Lab, where his algorithmic system allows a robot to learn from limited human demonstrations and by trial and error. Like young children, only faster.

This work has spanned parallel jaw grippers for warehouse picking, packing and extracting; robotic chopsticks for meticulous grasping; and a three-fingered robotic “hand” that approaches human dexterity in maneuvering household items in the UW Robotics Lab’s test kitchen.

Gupta’s next frontier is dexterous grasping in the most real-world environment of all: clutter. “Grasping has seen a lot of progress in the last few years,” he says. “But grasping in clutter is a pretty unsolved problem. We’re very much in its infancy.”

Toward the superhuman

While roboticists chip away at different facets of the great grasping challenge, there’s a bigger question to consider: how much more can be achieved by moving beyond mimicry of the human hand?

After all, our hands are just one evolutionary outcome — remarkably versatile, but not necessarily the best possible design for every task. Starting with the problem, rather than existing anatomy, could open new paths for innovation.

Srinivasa envisions general-purpose robots equipped with a set of interchangeable graspers, tools designed to work in harmony with intelligent software and complex learning algorithms to take on a wide range of challenging tasks.

This mindset is already shaping real-world advances.

In the Personal Robotics Lab, for instance, Srinivasa and his collaborators are developing a robot that can feed a person with limited mobility, deftly handling everything from sushi to spaghetti to syrah. Another operates chopsticks with surgical precision for exceedingly delicate tasks. And they are designing next-generation robots that may someday be capable of round-the-clock work in complex and extreme environments, like maintaining the engine room of a nuclear submarine.

"I like to focus on problems that seem impossible to solve," Srinivasa says. "I want robots to do what humans can't: superhuman things."